NENLP is a meeting series for academic and industry NLP researchers who live and work in New England.

Accepted to COLING 2020

This workshop invites both practical and theoretical unexpected or negative results that have important implications for future research, highlight methodological issues with existing approaches, and/or point out pervasive misunderstandings or bad practices. In particular, the most successful NLP models currently rely on different kinds of pretrained meaning representations (from word embeddings to Transformer-based models like BERT). To complement all the success stories, it would be insightful to see where, and possibly why, they fail. All NLP tasks are welcome: sequence labeling, question answering, inference, dialogue, machine translation, you name it.

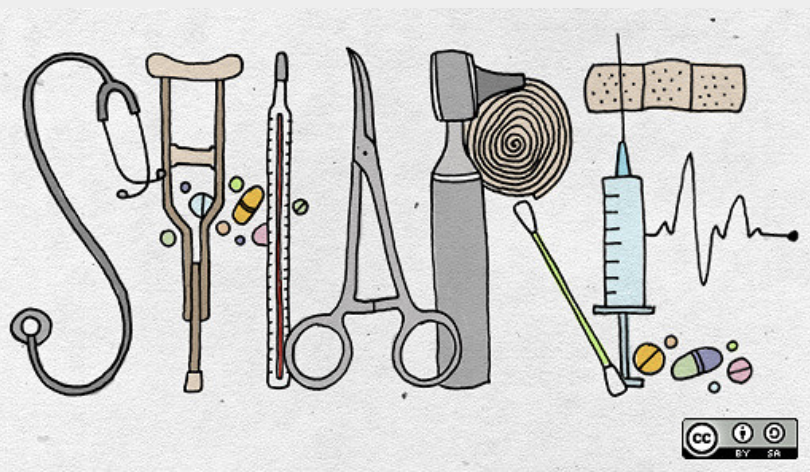

The Clinical NLP workshop series addresses the unique challenges of dealing with clinical narrative from electronic health records. Doctors' and nurses' notes, radiology reports, discharge summaries, and other clinical narrative notes contain unique information about disease trajectories, patient outcomes, drug side effects, and other related issues. The goal of Clinical NLP is to develop in-domain methods to utilize this information.

Clinical NLP workshops are co-located with the main CL venues, and their aim is to encourage general-domain NLP researchers to get involved, bringing their expertise to this exciting field. Here is the list of workshops in this series held to date:

We organized a shared task on normalizing clinical concepts in provider notes to standardized vocabularies. The task was originally scheduled to run at SemEval-2019 as Task 11, but was delayed due to data access issues. It was instead run in the spring of 2019 as an i2b2 spin-off shared task, with results presented at an N2C2 workshop co-located with AMIA 2019.

Check out our task video here: https://www.youtube.com/watch?v=uYZTqYxo9AU

The third edition of RepEval aims to support the search for high-quality, general-purpose representation learning techniques for NLP. We hope to encourage interdisciplinary dialogue by welcoming diverse perspectives on these issues: submissions may focus on properties of embedding spaces, performance analysis on various downstream tasks, or approaches based on linguistic and psychological data. In particular, experts from the latter fields are encouraged to contribute analyses of claims previously made in the NLP community.

This tutorial covered current proposals for representing and interpreting semantic features in word embeddings, representing morphological information, building sentence representations, and encoding abstract linguistic structures that are necessary for grammar but hard to capture distributionally. For each problem, we discussed the existing evaluation datasets and ways to improve them.

This task aimed to stimulate the development of novel methods for humor detection that would not treat humor as a binary variable and would also take its subjectivity into account. The shared task was run with a new dataset based on humorous responses submitted to a Comedy Central TV show.

This tutorial provided a detailed introduction to Deep Semantic Annotation, along with practical guidance on decomposing complex deep annotation tasks so that they can be annotated on Amazon Mechanical Turk.